Master Data Management (MDM), according to Gartner, is a “technology-enabled discipline in which business and IT work together to ensure the uniformity, accuracy, stewardship, semantic consistency, and accountability of the enterprise’s official shared master data assets. Master data is the consistent and uniform set of identifiers and extended attributes that describe the core entities of the enterprise, including customers, prospects, citizens, suppliers, sites, hierarchies, and chart of accounts.”

Traditionally, organizations deployed MDM solutions on-premises, that is, they installed and maintained them on their own servers and infrastructure. However, with the advent of cloud computing, a new option emerged: Cloud MDM.

This blog unravels the ‘What, Why, and How’ of Cloud MDM, emphasizing its advantages over conventional approaches.

What is Cloud MDM?

Cloud MDM solutions are hosted in the cloud and delivered over the internet rather than installed on-premises. Cloud master data management is designed to provide a centralized platform for data management, helping organizations achieve higher levels of consistency, accuracy, and completeness in their data. Cloud MDM is among the top 5 MDM trends in today’s digital realm.

Cloud MDM offers several benefits over traditional on-premises MDM, such as:

Lower cost: Cloud MDM eliminates the need for upfront capital expenditure on hardware, software, and maintenance. Cloud MDM also offers flexible pricing models, such as pay-as-you-go or pay-per-use, which can reduce the total cost of ownership.

Faster deployment: Cloud MDM can be deployed faster than traditional on-premises MDM. Cloud solutions often come with prebuilt templates, connectors, and integrations, which can speed up the implementation process.

Easier management: It simplifies administration and maintenance, with cloud providers handling updates, patches, backups, and security. It also offers self-service capabilities, which can empower business users to access and manage their data.

Greater agility: Cloud MDM enables faster changes and enhancements with minimal downtime. It also supports scalability and elasticity, adapting to changing data volumes and organizational demands.

How does Cloud MDM differ from Traditional On-Premises MDM?

While Cloud MDM and traditional on-premises MDM share the same goal of delivering high-quality and consistent data, they differ in several aspects, such as:

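Architecture: Cloud MDM typically uses a multi-tenant architecture, in which customers share the provider’s infrastructure. On-premises MDM relies on a single-tenant architecture, where the organization pays for and maintains dedicated infrastructure, which increases costs.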

Data storage: Cloud MDM stores data in the cloud, making it accessible from anywhere, whereas on-premises MDM restricts data access to the organization’s network.

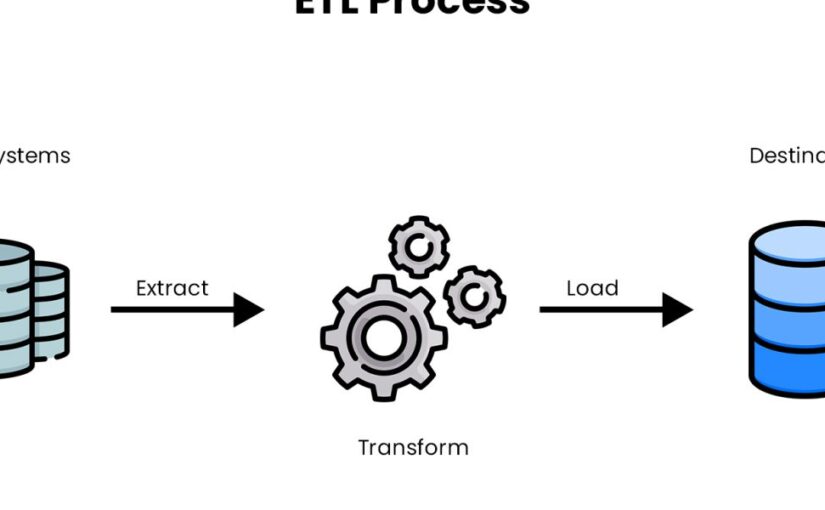

Data integration: Cloud MDM supports integrating data from various sources, including cloud applications, web services, social media, and mobile devices. Traditional MDM primarily integrates data from internal sources such as databases, ERP, CRM, and BI systems.

Data security: Cloud MDM relies largely on the cloud provider’s security measures, while on-premises MDM depends on the organization’s own security measures.

Key Features of Cloud MDM

Cloud MDM solutions offer a range of features and functionalities to enable effective and efficient MDM, such as:

Data centralization: Serves as a unified hub for all master data, consolidating details about customers, products, suppliers, and other entities into a single repository. This removes data silos and gives the whole organization access to consistent, current data.

Data merging: Allows for the consolidation and reconciliation of data records from different sources into a single golden record, which represents the most accurate and complete version of the entity.

Integration capabilities: Integrates with various cloud-based services and enterprise systems. This interoperability makes master data accessible wherever it is required and increases its overall utility.

Data governance: Allows defining and enforcing the policies, roles, and workflows that govern the data lifecycle, such as creation, modification, deletion, and distribution.

Cloud-based security: Incorporates stringent security protocols, including encryption, data backup procedures, and adherence to industry standards and regulations. This helps safeguard data against potential threats and breaches.
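
To make the data merging feature more concrete, here is a minimal Python sketch of how records from several sources might be consolidated into one golden record. It is an illustration only, not how any particular Cloud MDM product works. The `SourceRecord` schema, the source names, the sample values, and the survivorship rule (keep the newest non-empty value for each attribute) are all assumptions made for this example, and the sketch assumes the records have already been matched to the same customer.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class SourceRecord:
    """One view of a customer from a single source system (hypothetical schema)."""
    source: str
    updated: date
    name: Optional[str] = None
    email: Optional[str] = None
    phone: Optional[str] = None


def merge_golden(records: list[SourceRecord]) -> dict[str, Optional[str]]:
    """Build a golden record: for each attribute, keep the newest non-empty value.

    This survivorship rule is an assumption for illustration; real deployments
    often combine recency with source trust rankings or data quality scores.
    """
    golden: dict[str, Optional[str]] = {"name": None, "email": None, "phone": None}
    # Walk the records newest first, so the first non-empty value seen wins.
    for record in sorted(records, key=lambda r: r.updated, reverse=True):
        for attribute in golden:
            value = getattr(record, attribute)
            if golden[attribute] is None and value:
                golden[attribute] = value
    return golden


# Hypothetical records for the same customer from three systems.
records = [
    SourceRecord("crm", date(2023, 5, 1), name="Jane Doe", email="jane@old.example"),
    SourceRecord("erp", date(2024, 1, 15), name="Jane A. Doe", phone="555-0100"),
    SourceRecord("web", date(2024, 3, 2), email="jane@example.com"),
]

golden = merge_golden(records)
print(golden)
```

In this sketch the golden record takes the email from the most recent web record and the name and phone from the ERP record, because those are the newest non-empty values for each attribute. The older CRM values are superseded.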

Conclusion

As we conclude our exploration, it becomes evident that Cloud MDM is not just a modern approach to data management; it’s a strategic advantage. The advantages it offers, coupled with its distinct features, position Cloud MDM as a transformative force in the dynamic landscape of Master Data Management.

Artha Solutions is a Trusted Cloud MDM Implementation Service Provider

With a decade of expertise, Artha Solutions is a pioneering provider of tailored cloud Master Data Management (MDM) solutions. Our client-centric approach, coupled with a diverse team of certified professionals, ensures precision in addressing unique organizational goals. Artha Solutions goes beyond delivering solutions; we forge transformative partnerships for optimal cloud-based MDM success.